Drawing from Life in Extended Reality

Collaborative Research: HCC: Small: RUI: Drawing from Life in Extended Reality: Advancing and Teaching Cross-Reality User Interfaces for Observational 3D Sketching

Overview

Daniel Keefe (University of Minnesota)

NSF Award #2326998

Bret Jackson (Macalester College)

NSF Award #2326999

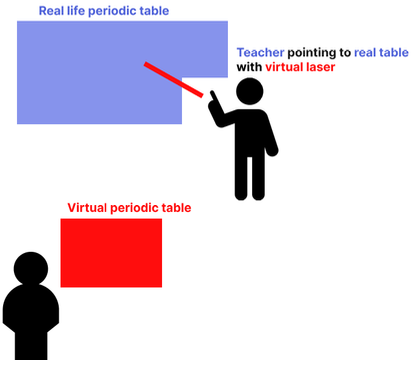

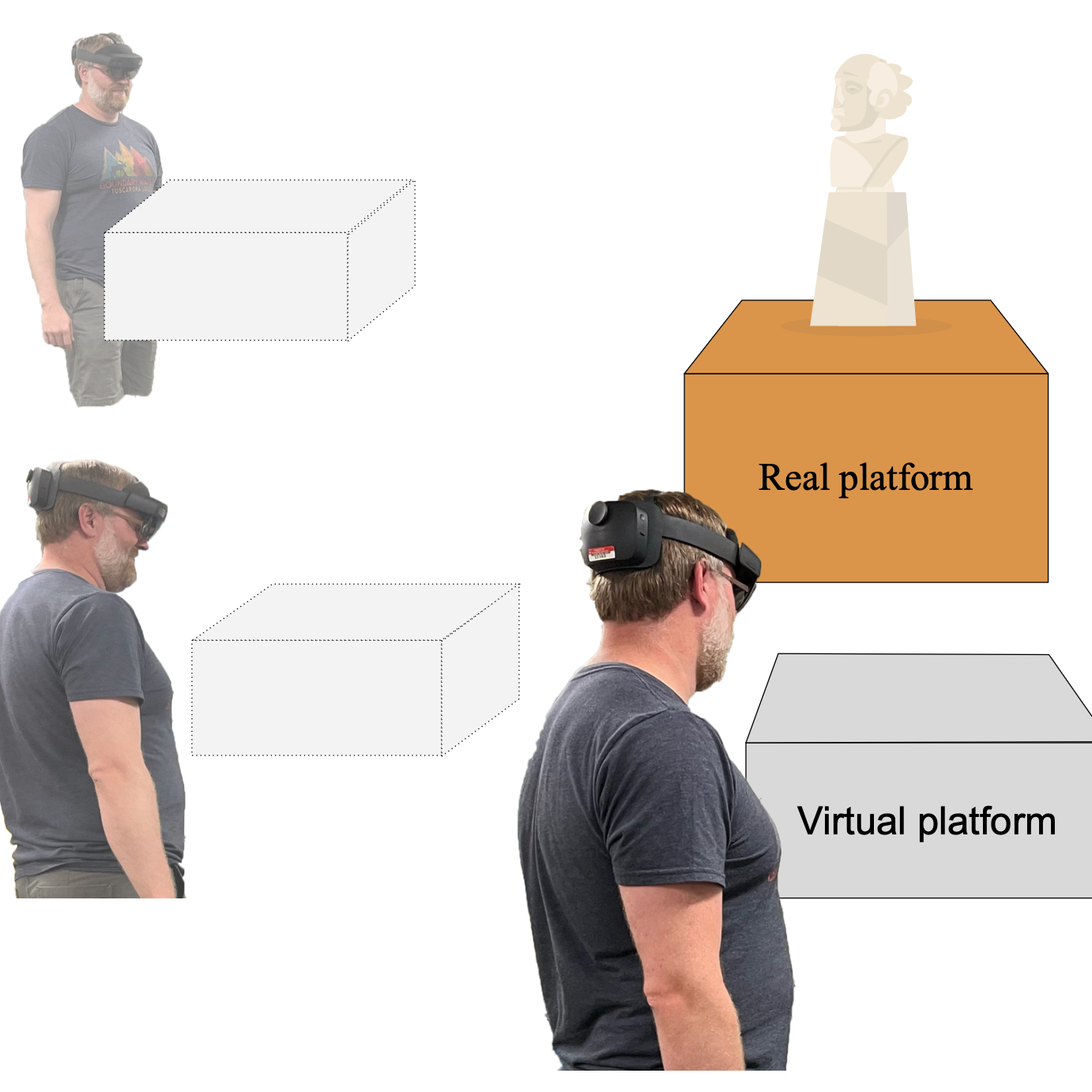

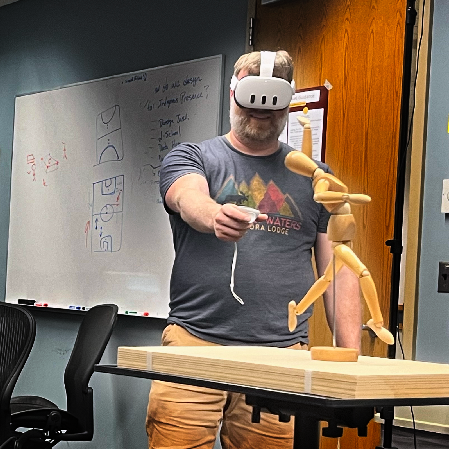

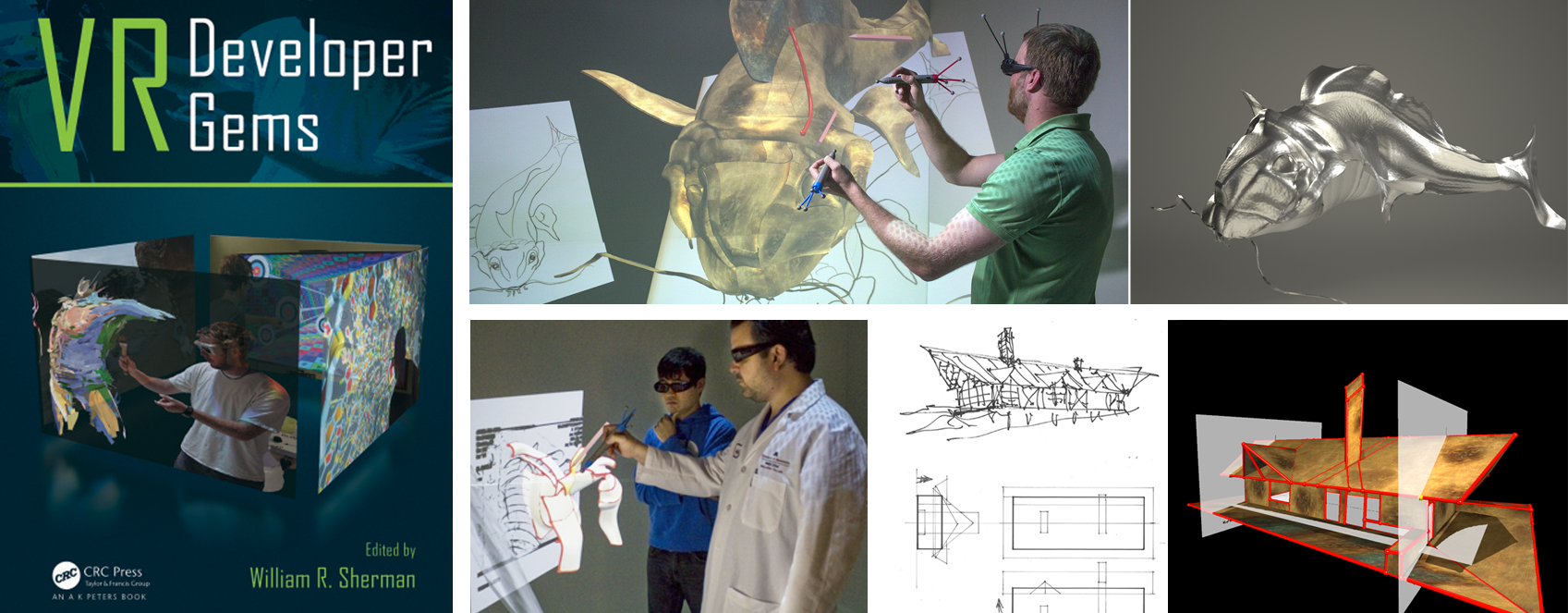

This project advances the science of how people work effectively and creatively within future computing environments that span the real world, virtual reality (VR), and mixed digital+physical spaces in between. Since drawing is fundamental to creative work across many disciplines (e.g., product design, architecture, engineering, art), the investigators focus on the emerging medium of 3D drawing, a form of computer-aided drawing where sweeping movements of a 3D-tracked pen are used to create virtual 3D drawings that appear to float in the air. In the hands of artists, architects, product designers, engineers, and others, virtual reality 3D drawing technologies have already led to exciting new design processes and products. However, despite some early successes and great potential, 3D drawing, like so many other early applications of VR, remains limited when compared to its physical, real-world counterpart, traditional drawing. Take, for example, the way artists and product designers are taught to draw: learning to draw in the real world starts with learning to see, i.e., making careful observations of a subject. For advanced drawers, real-world drawing from observation is even a way to study a subject, a mode of inquiry. Yet creative work in virtual environments takes the opposite approach: users have powerful tools and an endless 3D canvas, but no means of connecting these to their notes, sketches, models, experiments, and other real-world artifacts. This project presents a new vision for 3D drawing that makes it applicable to impactful work with real-world subjects (e.g., scientists working in the laboratory or the field, engineers conducting a design review, artists working for social change). The key approach is to re-center 3D drawing, and other creative tasks typically performed in virtual environments, on the foundational skill of careful observation.

Specifically, the project develops new Augmented- and Mixed-Reality (AR and MR) tools for making observations from multiple perspectives and using those observations to create 3D drawings. The success of these foundational tools is assessed in lab-based user studies. The integrated educational program creates college courses and educational materials to train students in these new cross-reality modes of working and to build capacity among potential 3D drawing instructors for teaching this new style of observational 3D drawing in AR. Undergraduate students in both computer science and art/design disciplines are trained as key members of the interdisciplinary research team.

Prior research on 3D drawing has made 3D pens more controllable and led to many exciting software tools, but none of them help people to work across realities to draw what they see. This project creates novel user interface techniques and viewpoint entropy algorithms for making observations (e.g., judging relative proportion, angles, and negative space) by identifying, visualizing, and navigating the most useful viewpoints for observations of a real-world subject. It also develops spatial user interfaces for translating observations into hand-drawn 3D forms with hybrid 2D+3D sketching interfaces and computer graphics sketch-based 3D modeling algorithms. The deep focus on real-world observation makes the proposed techniques ideal examples of emerging research on cross-reality user interfaces that support seamless transitions between reality, virtuality, and mixed spaces in between. The project includes a tightly integrated educational plan, and the novel systems developed will be deployed in classrooms at multiple institutions. The success and potential of the new technologies and educational modules developed will be assessed through a combination of lab- and classroom-based user studies. This research effort will open the door to using 3D drawing as a mode of inquiry to leverage drawing's expressive power for representing the world around us.
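To give a concrete sense of what a viewpoint entropy measure computes, here is a minimal sketch of the classic formulation from the computer graphics literature: the Shannon entropy of the relative projected areas of an object's faces as seen from a candidate viewpoint. This is an illustration under stated assumptions, not the project's implementation. It assumes a convex shape under orthographic projection (so there is no occlusion to resolve), it omits the background-area term that the full formulation includes, and all function names and the cube test data are hypothetical.

```python
import math


def viewpoint_entropy(projected_areas):
    """Shannon entropy (in bits) of the distribution of visible projected face areas."""
    visible = [a for a in projected_areas if a > 0.0]
    total = sum(visible)
    if total == 0.0:
        return 0.0
    return -sum((a / total) * math.log2(a / total) for a in visible)


def projected_areas(face_normals, face_areas, view_dir):
    """Orthographic projected area of each face of a convex shape.

    Assumption: view_dir points from the object toward the viewer, and the
    shape is convex, so a face is visible exactly when its outward normal
    faces the viewer (back faces project to zero area).
    """
    length = math.sqrt(sum(c * c for c in view_dir))
    v = [c / length for c in view_dir]
    return [
        area * max(0.0, sum(n_c * v_c for n_c, v_c in zip(normal, v)))
        for normal, area in zip(face_normals, face_areas)
    ]


def best_viewpoint(face_normals, face_areas, candidate_dirs):
    """Return the candidate view direction with the highest viewpoint entropy."""
    return max(
        candidate_dirs,
        key=lambda d: viewpoint_entropy(projected_areas(face_normals, face_areas, d)),
    )


# Hypothetical test subject: a unit cube described by its six face normals.
CUBE_NORMALS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
CUBE_AREAS = [1.0] * 6

# Face-on, edge-on, and corner-on candidate view directions.
candidates = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]
best = best_viewpoint(CUBE_NORMALS, CUBE_AREAS, candidates)
print(best)
```

For the cube, the face-on view shows a single face (entropy 0 bits), the edge-on view shows two faces equally (1 bit), and the corner-on view shows three faces equally (log2(3) bits), so the corner-on direction is selected. A practical system would typically estimate projected areas from a rendered image of the real or scanned subject rather than from analytic face normals.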

Teaching 3D Drawing

CSCI-8605: 3D Drawing in eXtended Reality -- Syllabus, schedule, and links to readings and other materials are available online. Next taught in Spring 2026!

The project is creating a new curriculum for teaching 3D drawing in a hybrid studio/lecture format. The new course, first taught in Spring 2025, attracted students from the Departments of Computer Science, Electrical Engineering, Art, Graphic Design, and Architecture.

Technical Advances

The technical advances in the project focus on two themes:

- Identifying, Visualizing, and Navigating Useful Multi-perspective Observations

- Creating Strategies and Interfaces for Drawing what You See

So far, the results include:

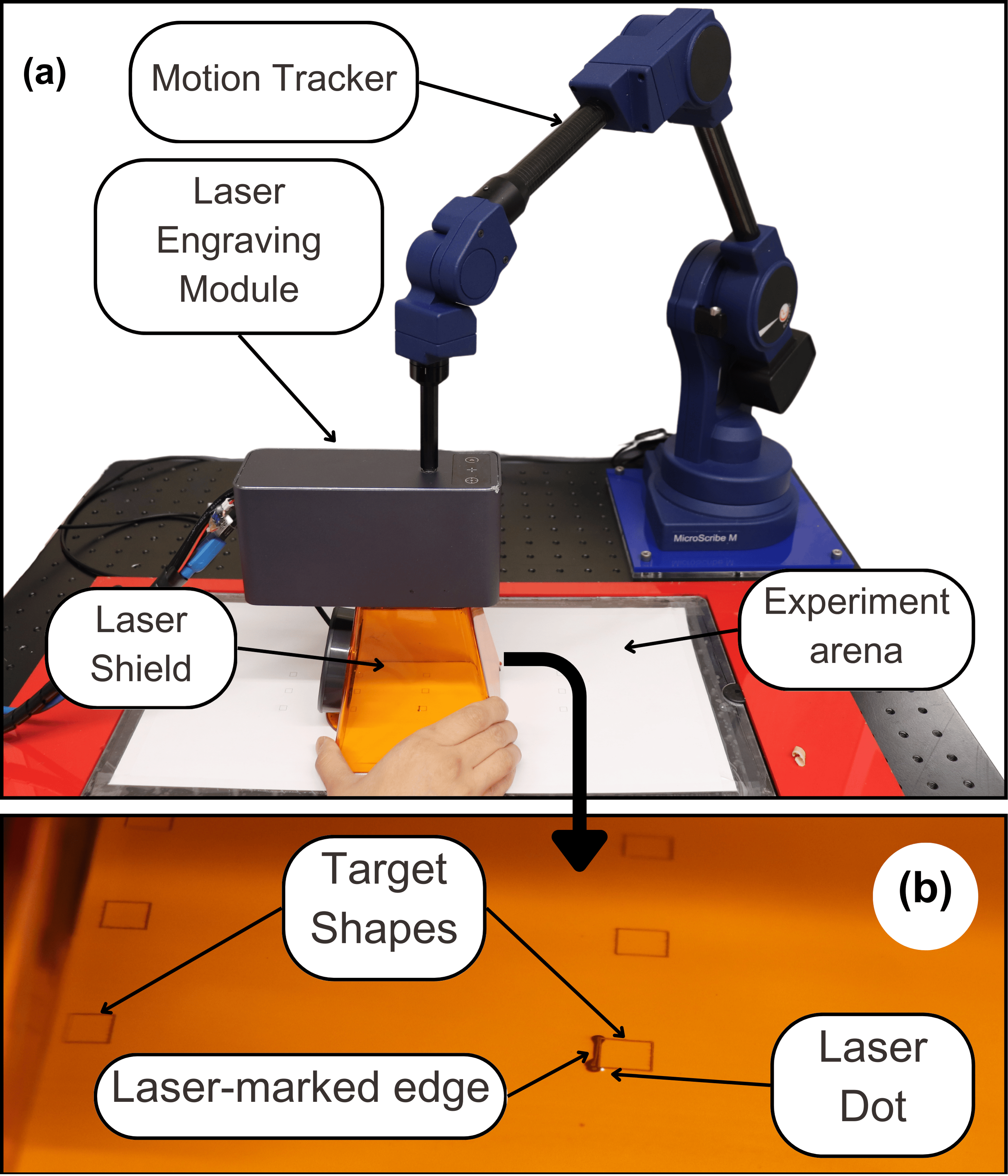

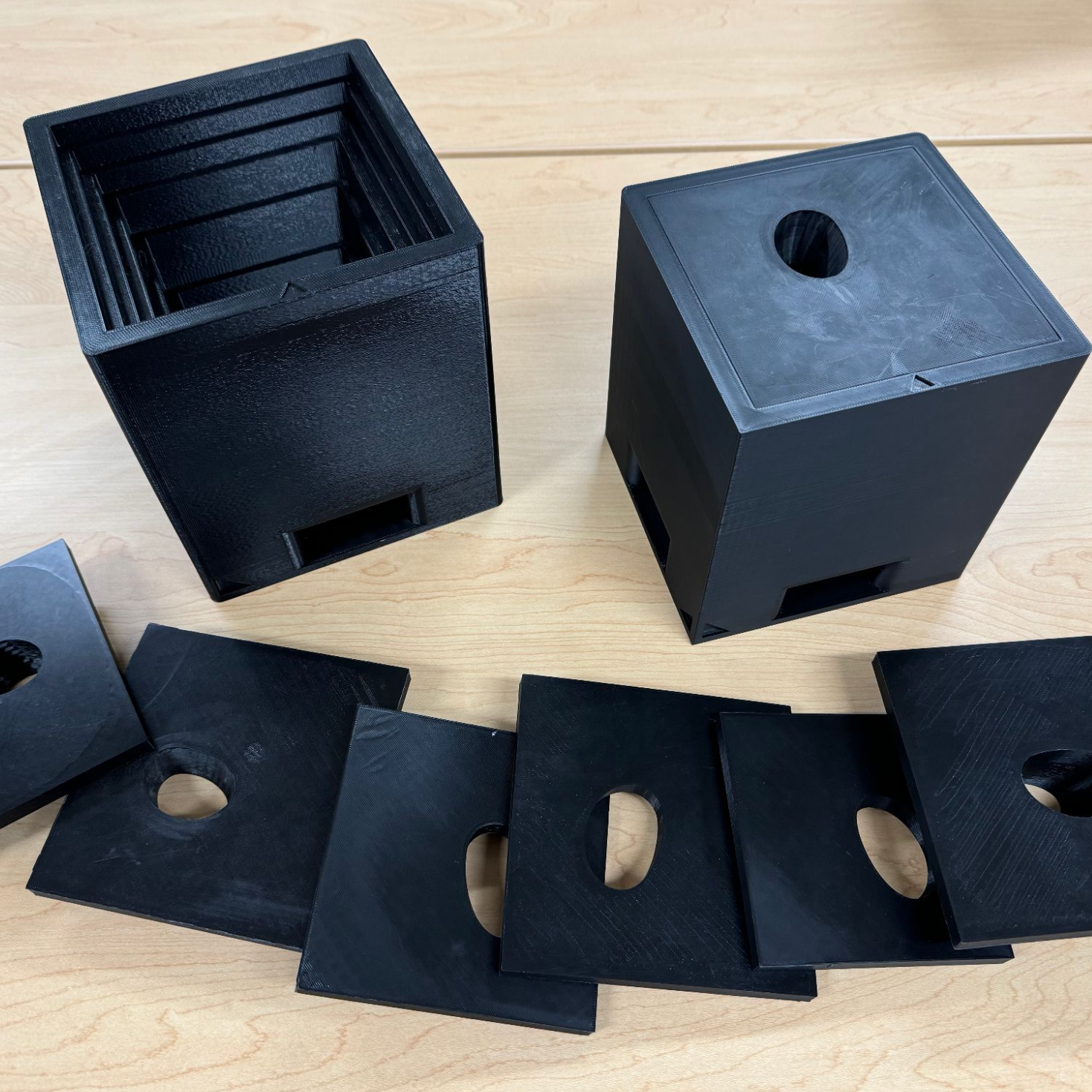

- Base mixed reality 3D drawing software and hardware infrastructure.

- Novel user interfaces for cross-reality multiview sighting of points-of-interest.

- Fast, classroom-ready calibration techniques for fleets of dozens of MR headsets.

- Automatic best-viewpoint selection with viewpoint entropy.

- 3D Drawing Curriculum and First Course Taught

- First Implementation of Animated Cross-Reality Transitions

- Observation Frame 3D Widgets with 2D+3D Curve Editing UI

- Method for quantitative evaluation of the novel sighting user interface.

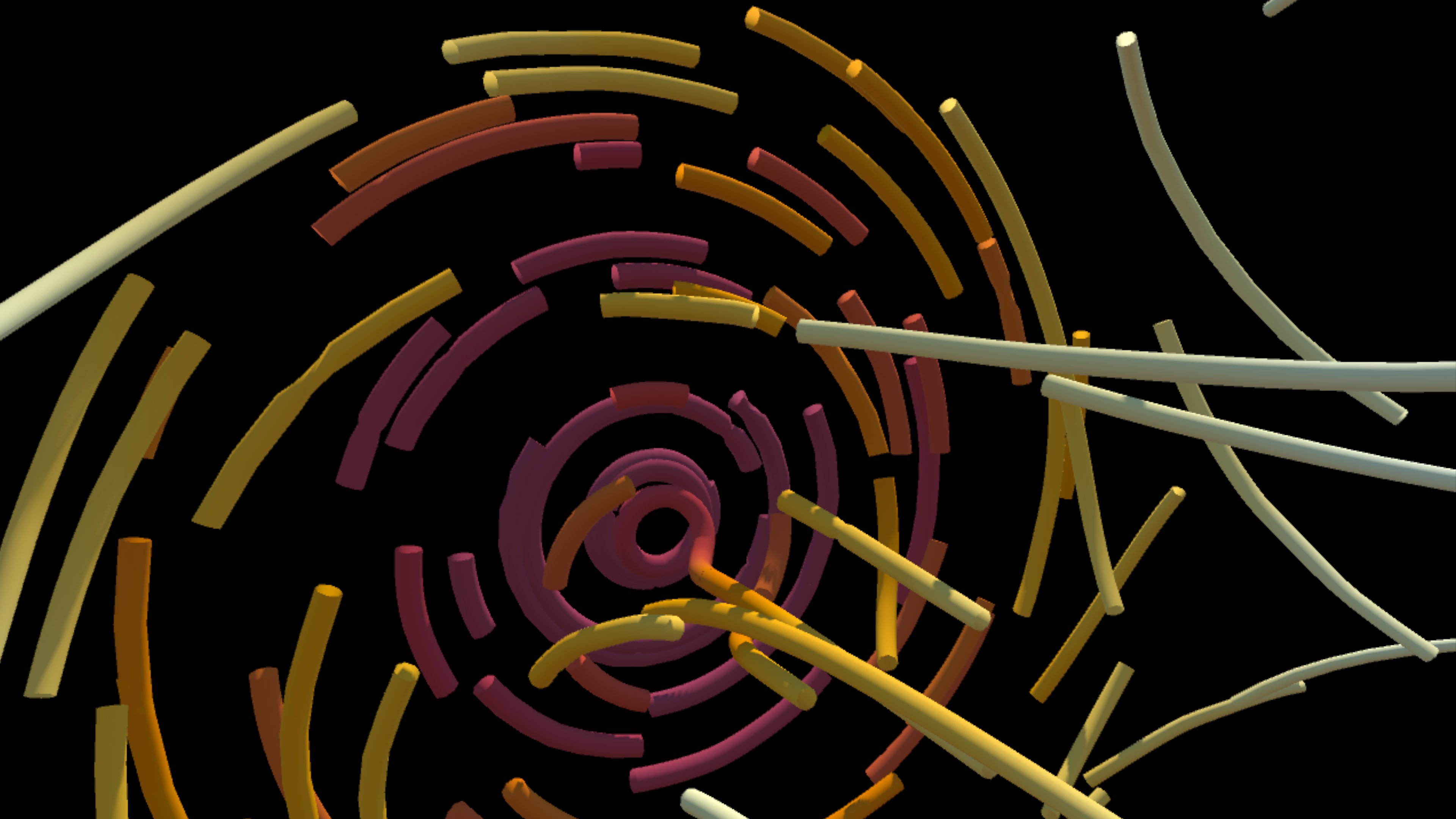

- 3D Drawing User Interface for Data Visualization

Stay tuned for publications describing these results and more.

Summer 2025 REU Team

Lucy Manalang

Macalester College

Huzaifa Mohammad

Macalester College

Summer 2024 REU Team

Tenzin Dayoe

Macalester College

Tu Tran

Macalester College